
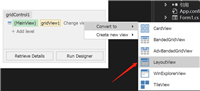

Project directory structure

Source of the first spider under spiders/

# -*- coding: utf-8 -*-
import scrapy

from firstblood.items import firstblooditem


class firstspider(scrapy.Spider):
    # Name of the spider.
    # When the project contains several spiders, this name selects the one to run.
    name = 'first'

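    # allowed_domains: the domains the spider is allowed to crawl. It conflicts with
    # start_urls when a start URL lies outside these domains, so it is commented out here.
    # allowed_domains = ['www.xxx.com']

    # start_urls: the list of URLs that Scrapy requests automatically when the spider starts.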

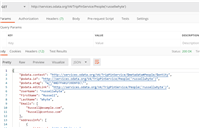

    start_urls = ['https://www.qiushibaike.com/text/']

    def parse(self, response):
        '''
        Parse the response to a request.

        Regular expressions or XPath can be used; Scrapy has XPath built in,
        so XPath is recommended. An XPath query returns Selector objects.

        :param response:
        :return:
        '''
        all_data = []
        div_list = response.xpath('//div[@id="content-left"]/div')
        for div in div_list:
            # First attempt:
            # author = div.xpath('./div[1]/a[2]/h2/text()')
            # xpath() does not return the page text directly but Selector objects;
            # the text is the data held by the Selector, obtained with extract().
            # author = author[0].extract()
            # This raises an error for anonymous users, whose markup differs
            # from that of logged-in users.

            # Improved version: match either structure
            author = div.xpath('./div[1]/a[2]/h2/text()| ./div[1]/span[2]/h2/text()')[0].extract()
            content = div.xpath('.//div[@class="content"]/span//text()').extract()
            content = ''.join(content)
            # print(author+':'+content.strip(' \n \t '))

            # Terminal-based storage
            # dic = {
            #     'author': author,
            #     'content': content
            # }
            # all_data.append(dic)
            # (after the loop) return all_data

            # Two ways of persisting the data:
            # 1. Via the terminal command; parse must return a value:
            #    scrapy crawl first -o qiubai.csv --nolog
            #    The command can only export formats such as json, csv and xml.
            # 2. Via an item pipeline:
            item = firstblooditem()  # instantiate a new item on every iteration
            item['author'] = author
            item['content'] = content
            yield item  # submit the item to the pipeline
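The terminal-based option in the comments above relies on `parse` returning plain records (dicts with `author` and `content`) that Scrapy's feed export then serializes. As a rough illustration of the resulting file shape only, the sketch below writes such records with Python's standard `csv` module. The sample records and the `records_to_csv` helper are hypothetical, and this is not Scrapy's own exporter.

```python
import csv
import io


def records_to_csv(records, fieldnames):
    """Serialize a list of dicts to CSV text: a header row, then one row per record."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator='\n')
    writer.writeheader()
    for record in records:
        writer.writerow(record)
    return buffer.getvalue()


# Hypothetical records shaped like the dicts built in parse().
sample = [
    {'author': 'alice', 'content': 'first joke'},
    {'author': 'bob', 'content': 'second, with a comma'},
]
csv_text = records_to_csv(sample, ['author', 'content'])
print(csv_text)
```

Fields that contain the delimiter are quoted automatically, which is one reason to prefer an exporter over hand-built strings.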

The items file

# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy


class firstblooditem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    # A Field is a general-purpose container: it accepts a value of any type
    # (a string, JSON data, and so on).
    author = scrapy.Field()
    content = scrapy.Field()

The pipelines file

# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html

# All code related to persistent storage belongs in this file.


class firstbloodpipeline(object):
    fp = None

    def open_spider(self, spider):
        # Called once when the spider starts: open the output file.
        print('Spider started')
        self.fp = open('./qiushibaike.txt', 'w', encoding='utf-8')

    def process_item(self, item, spider):
        '''
        Process one item submitted by the spider.

        :param item:
        :param spider:
        :return:
        '''
        self.fp.write(item['author'] + ':' + item['content'])
        print(item['author'], item['content'])
        return item

    def close_spider(self, spider):
        # Called once when the spider finishes: close the output file.
        print('Spider finished')
        self.fp.close()
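The pipeline above depends on the order in which its hooks are called: `open_spider` once at the start, `process_item` once per yielded item, and `close_spider` once at the end. A minimal sketch of that call order follows. The `run_pipeline` driver is a hypothetical stand-in for Scrapy's engine, and `BufferPipeline` is an illustrative variant that writes to memory (one record per line) instead of a file.

```python
import io


class BufferPipeline:
    """Same three hooks as the pipeline above, writing to memory instead of disk."""

    def open_spider(self, spider):
        self.fp = io.StringIO()

    def process_item(self, item, spider):
        self.fp.write(item['author'] + ':' + item['content'] + '\n')
        return item  # hand the item on to the next pipeline, if any

    def close_spider(self, spider):
        self.output = self.fp.getvalue()
        self.fp.close()


def run_pipeline(pipeline, items, spider=None):
    """Hypothetical stand-in for the engine: open once, process each item, close once."""
    pipeline.open_spider(spider)
    processed = [pipeline.process_item(item, spider) for item in items]
    pipeline.close_spider(spider)
    return processed


pipeline = BufferPipeline()
items = [
    {'author': 'alice', 'content': 'hello'},
    {'author': 'bob', 'content': 'world'},
]
result = run_pipeline(pipeline, items)
print(pipeline.output)
```

Opening the file in `open_spider` rather than in `process_item` means it is opened once per crawl instead of once per item.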

The settings file

# -*- coding: utf-8 -*-

# Scrapy settings for firstblood project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     https://doc.scrapy.org/en/latest/topics/settings.html
#     https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
#     https://doc.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'firstblood'

SPIDER_MODULES = ['firstblood.spiders']
NEWSPIDER_MODULE = 'firstblood.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
USER_AGENT = 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.77 Safari/537.36'

# Obey robots.txt rules
# True in the generated project; set to False so the crawler does not obey
# robots.txt (a way around that anti-crawling measure).
ROBOTSTXT_OBEY = False

# Configure maximum concurrent requests performed by Scrapy (default: 16)
#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)
# See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
#DOWNLOAD_DELAY = 3
# The download delay setting will honor only one of:
#CONCURRENT_REQUESTS_PER_DOMAIN = 16
#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)
#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)
#TELNETCONSOLE_ENABLED = False

# Override the default request headers:
#DEFAULT_REQUEST_HEADERS = {
#   'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
#   'Accept-Language': 'en',
#}

# Enable or disable spider middlewares
# See https://doc.scrapy.org/en/latest/topics/spider-middleware.html
#SPIDER_MIDDLEWARES = {
#    'firstblood.middlewares.firstbloodspidermiddleware': 543,
#}

# Enable or disable downloader middlewares
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
#DOWNLOADER_MIDDLEWARES = {
#    'firstblood.middlewares.firstblooddownloadermiddleware': 543,
#}

# Enable or disable extensions
# See https://doc.scrapy.org/en/latest/topics/extensions.html
#EXTENSIONS = {
#    'scrapy.extensions.telnet.TelnetConsole': None,
#}

# Configure item pipelines
# See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'firstblood.pipelines.firstbloodpipeline': 300,  # 300 is the priority; lower values run first
}

# Enable and configure the AutoThrottle extension (disabled by default)
# See https://doc.scrapy.org/en/latest/topics/autothrottle.html
#AUTOTHROTTLE_ENABLED = True
# The initial download delay
#AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
#AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
#HTTPCACHE_ENABLED = True
#HTTPCACHE_EXPIRATION_SECS = 0
#HTTPCACHE_DIR = 'httpcache'
#HTTPCACHE_IGNORE_HTTP_CODES = []
#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'
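The number assigned to each entry in the item pipelines setting decides the order: items pass through lower-valued pipelines first. The sketch below only illustrates that ordering rule. The second pipeline path and the `pipeline_order` helper are hypothetical; Scrapy performs this ordering internally.

```python
def pipeline_order(item_pipelines):
    """Return pipeline paths sorted by priority value, lowest (runs first) to highest."""
    ranked = sorted(item_pipelines.items(), key=lambda entry: entry[1])
    return [path for path, _priority in ranked]


ITEM_PIPELINES = {
    'firstblood.pipelines.firstbloodpipeline': 300,
    # Hypothetical second pipeline, not part of the project shown here.
    'firstblood.pipelines.dbpipeline': 200,
}
order = pipeline_order(ITEM_PIPELINES)
print(order)
```

Because each pipeline's `process_item` returns the item, the next pipeline in this order receives it.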
