吉林市人事考试网,保健食品批号查询,冰凌檬

标签(空格分隔): quartz.net job

最近工作上要用job,公司的job有些不满足个人的使用,于是就想自己搞一个job站练练手,网上看了一下,发现quartz,于是就了解了一下。

目前个人使用的是asp.net core,在core2.0下面进行的开发。

第一版自己简单的写了一个调度器。

public static class schedulermanage

{

private static ischeduler _scheduler = null;

private static object obj = new object();

public static ischeduler scheduler

{

get

{

var scheduler = _scheduler;

if (scheduler == null)

{

//在这之前有可能_scheduler被改变了scheduler用的还是原来的值

lock (obj)

{

//这里读取最新的内存里面的值赋值给scheduler,保证读取到的是最新的_scheduler

scheduler = volatile.read(ref _scheduler);

if (scheduler == null)

{

scheduler = getscheduler().result;

volatile.write(ref _scheduler, scheduler);

}

}

}

return scheduler;

}

}

public static async task<baseresponse> runjob(ijobdetail job, itrigger trigger)

{

var response = new baseresponse();

try

{

var isexist = await scheduler.checkexists(job.key);

var time = datetimeoffset.now;

if (isexist)

{

//恢复已经存在任务

await scheduler.resumejob(job.key);

}

else

{

time = await scheduler.schedulejob(job, trigger);

}

response.issuccess = true;

response.msg = time.tostring("yyyy-mm-dd hh:mm:ss");

}

catch (exception ex)

{

response.msg = ex.message;

}

return response;

}

public static async task<baseresponse> stopjob(jobkey jobkey)

{

var response = new baseresponse();

try

{

var isexist = await scheduler.checkexists(jobkey);

if (isexist)

{

await scheduler.pausejob(jobkey);

}

response.issuccess = true;

response.msg = "暂停成功!!";

}

catch (exception ex)

{

response.msg = ex.message;

}

return response;

}

public static async task<baseresponse> deljob(jobkey jobkey)

{

var response = new baseresponse();

try

{

var isexist = await scheduler.checkexists(jobkey);

if (isexist)

{

response.issuccess = await scheduler.deletejob(jobkey);

}

}

catch (exception ex)

{

response.issuccess = false;

response.msg = ex.message;

}

return response;

}

private static async task<ischeduler> getscheduler()

{

namevaluecollection props = new namevaluecollection() {

{"quartz.serializer.type", "binary" }

};

stdschedulerfactory factory = new stdschedulerfactory(props);

var scheduler = await factory.getscheduler();

await scheduler.start();

return scheduler;

}

}

简单的实现了,动态的运行job,暂停job,添加job。弄完以后,发现貌似没啥问题,只要自己把运行的job信息找张表存储一下,好像都ok了。

轮到发布的时候,突然发现现实机器不止一台,是通过nigix进行反向代理。突然发现以下几个问题:

1,多台机器很有可能一个job在多台机器上运行。

2,当进行部署的时候,必须得停掉机器,如何在机器停掉以后重新部署的时候自动恢复正在运行的job。

3,如何均衡的运行所有job。

1,第一个问题:由于是经过nigix的反向代理,添加job和运行job只能落到一台服务器上,基本没啥问题。个人控制好runjob的接口,运行了一次,把jobdetail的那张表的运行状态改成已运行,也就不存在多个机器同时运行的情况。

2,在第一个问题解决的情况下,由于我们公司的nigix反向代理的逻辑是:均衡策略。所以均衡运行所有job都没啥问题。

3,重点来了!!!!

如何在部署的时候恢复正在运行的job?

由于我们已经有了一张jobdetail表。里面可以获取到哪些正在运行的job。wome我们把他找出来直接在程序启动的时候运行一下不就好了吗嘛。

下面是个人实现的:

//hostedservice,在主机运行的时候运行的一个服务

public class hostedservice : ihostedservice

{

public hostedservice(ischedulerjob schedulercenter)

{

_schedulerjob = schedulercenter;

}

private ischedulerjob _schedulerjob = null;

public async task startasync(cancellationtoken cancellationtoken)

{

loghelper.writelog("开启hosted+env:"+env);

var reids= new redisoperation();

if (reids.setnx("redisjoblock", "1"))

{

await _schedulerjob.startallruningjob();

}

reids.expire("redisjoblock", 300);

}

public async task stopasync(cancellationtoken cancellationtoken)

{

loghelper.writelog("结束hosted");

var redis = new redisoperation();

if (redis.redisexists("redisjoblock"))

{

var count=redis.delkey("redisjoblock");

loghelper.writelog("删除reidskey-redisjoblock结果:" + count);

}

}

}

//注入用的特性

[servicedescriptor(typeof(ischedulerjob), servicelifetime.transient)]

public class schedulercenter : ischedulerjob

{

public schedulercenter(ischedulerjobfacade schedulerjobfacade)

{

_schedulerjobfacade = schedulerjobfacade;

}

private ischedulerjobfacade _schedulerjobfacade = null;

public async task<baseresponse> deljob(schedulerjobmodel jobmodel)

{

var response = new baseresponse();

if (jobmodel != null && jobmodel.jobid != 0 && jobmodel.jobname != null)

{

response = await _schedulerjobfacade.modify(new schedulerjobmodifyrequest() { jobid = jobmodel.jobid, dataflag = 0 });

if (response.issuccess)

{

response = await schedulermanage.deljob(getjobkey(jobmodel));

if (!response.issuccess)

{

response = await _schedulerjobfacade.modify(new schedulerjobmodifyrequest() { jobid = jobmodel.jobid, dataflag = 1 });

}

}

}

else

{

response.msg = "请求参数有误";

}

return response;

}

public async task<baseresponse> runjob(schedulerjobmodel jobmodel)

{

if (jobmodel != null)

{

var jobkey = getjobkey(jobmodel);

var trigglebuilder = triggerbuilder.create().withidentity(jobmodel.jobname + "trigger", jobmodel.jobgroup).withcronschedule(jobmodel.jobcron).startat(jobmodel.jobstarttime);

if (jobmodel.jobendtime != null && jobmodel.jobendtime != new datetime(1900, 1, 1) && jobmodel.jobendtime == new datetime(1, 1, 1))

{

trigglebuilder.endat(jobmodel.jobendtime);

}

trigglebuilder.forjob(jobkey);

var triggle = trigglebuilder.build();

var data = new jobdatamap();

data.add("***", "***");

data.add("***", "***");

data.add("***", "***");

var job = jobbuilder.create<schedulerjob>().withidentity(jobkey).setjobdata(data).build();

var result = await schedulermanage.runjob(job, triggle);

if (result.issuccess)

{

var response = await _schedulerjobfacade.modify(new schedulerjobmodifyrequest() { jobid = jobmodel.jobid, jobstate = 1 });

if (!response.issuccess)

{

await schedulermanage.stopjob(jobkey);

}

return response;

}

else

{

return result;

}

}

else

{

return new baseresponse() { msg = "job名称为空!!" };

}

}

public async task<baseresponse> stopjob(schedulerjobmodel jobmodel)

{

var response = new baseresponse();

if (jobmodel != null && jobmodel.jobid != 0 && jobmodel.jobname != null)

{

response = await _schedulerjobfacade.modify(new schedulerjobmodifyrequest() { jobid = jobmodel.jobid, jobstate = 2 });

if (response.issuccess)

{

response = await schedulermanage.stopjob(getjobkey(jobmodel));

if (!response.issuccess)

{

response = await _schedulerjobfacade.modify(new schedulerjobmodifyrequest() { jobid = jobmodel.jobid, jobstate = 2 });

}

}

}

else

{

response.msg = "请求参数有误";

}

return response;

}

private jobkey getjobkey(schedulerjobmodel jobmodel)

{

return new jobkey($"{jobmodel.jobid}_{jobmodel.jobname}", jobmodel.jobgroup);

}

public async task<baseresponse> startallruningjob()

{

try

{

var joblistresponse = await _schedulerjobfacade.querylist(new schedulerjoblistrequest() { dataflag = 1, jobstate = 1, environment=kernel.environment.tolower() });

if (!joblistresponse.issuccess)

{

return joblistresponse;

}

var joblist = joblistresponse.models;

foreach (var job in joblist)

{

await runjob(job);

}

return new baseresponse() { issuccess = true, msg = "程序启动时,启动所有运行中的job成功!!" };

}

catch (exception ex)

{

loghelper.writeexceptionlog(ex);

return new baseresponse() { issuccess = false, msg = "程序启动时,启动所有运行中的job失败!!" };

}

}

}

在程序启动的时候,把所有的job去运行一遍,当中对于多次运行的用到了redis的分布式锁,现在启动的时候锁住,不让别人运行,在程序卸载的时候去把锁释放掉!!感觉没啥问题,主要是可能负载均衡有问题,全打到一台服务器上去了,勉强能够快速的打到效果。当然高可用什么的就先牺牲掉了。

坑点又来了

大家知道,在稍微大点的公司,运维和开发是分开的,公司用的daoker进行部署,在程序停止的时候,不会调用

hostedservice的stopasync方法!!

当时心里真是一万个和谐和谐奔腾而过!!

个人也就懒得和运维去扯这些东西了。最后的最后就是:设置一个redis的分布式锁的过期时间,大概预估一个部署的时间,只要在部署直接,锁能够在就行了,然后每次部署的间隔要大于锁过期时间。好麻烦,说多了都是泪!!

public async task<ischeduler> getscheduler()

{

var properties = new namevaluecollection();

properties["quartz.serializer.type"] = "binary";

//存储类型

properties["quartz.jobstore.type"] = "quartz.impl.adojobstore.jobstoretx, quartz";

//表明前缀

properties["quartz.jobstore.tableprefix"] = "qrtz_";

//驱动类型

properties["quartz.jobstore.driverdelegatetype"] = "quartz.impl.adojobstore.sqlserverdelegate, quartz";

//数据库名称

properties["quartz.jobstore.datasource"] = "scheduljob";

//连接字符串data source = myserveraddress;initial catalog = mydatabase;user id = myusername;password = mypassword;

properties["quartz.datasource.scheduljob.connectionstring"] = "data source =.; initial catalog = scheduljob;user id = sa; password = *****;";

//sqlserver版本(core下面已经没有什么20,21版本了)

properties["quartz.datasource.scheduljob.provider"] = "sqlserver";

//是否集群,集群模式下要设置为true

properties["quartz.jobstore.clustered"] = "true";

properties["quartz.scheduler.instancename"] = "testscheduler";

//集群模式下设置为auto,自动获取实例的id,集群下一定要id不一样,不然不会自动恢复

properties["quartz.scheduler.instanceid"] = "auto";

properties["quartz.threadpool.type"] = "quartz.simpl.simplethreadpool, quartz";

properties["quartz.threadpool.threadcount"] = "25";

properties["quartz.threadpool.threadpriority"] = "normal";

properties["quartz.jobstore.misfirethreshold"] = "60000";

properties["quartz.jobstore.useproperties"] = "false";

ischedulerfactory factory = new stdschedulerfactory(properties);

return await factory.getscheduler();

}

然后是测试代码:

public async task testjob()

{

var sched = await getscheduler();

//console.writeline("***** deleting existing jobs/triggers *****");

//sched.clear();

console.writeline("------- initialization complete -----------");

console.writeline("------- scheduling jobs ------------------");

string schedid = sched.schedulername; //sched.schedulerinstanceid;

int count = 1;

ijobdetail job = jobbuilder.create<simplerecoveryjob>()

.withidentity("job_" + count, schedid) // put triggers in group named after the cluster node instance just to distinguish (in logging) what was scheduled from where

.requestrecovery() // ask scheduler to re-execute this job if it was in progress when the scheduler went down...

.build();

isimpletrigger trigger = (isimpletrigger)triggerbuilder.create()

.withidentity("triger_" + count, schedid)

.startat(datebuilder.futuredate(1, intervalunit.second))

.withsimpleschedule(x => x.withrepeatcount(1000).withinterval(timespan.fromseconds(5)))

.build();

console.writeline("{0} will run at: {1} and repeat: {2} times, every {3} seconds", job.key, trigger.getnextfiretimeutc(), trigger.repeatcount, trigger.repeatinterval.totalseconds);

sched.schedulejob(job, trigger);

count++;

job = jobbuilder.create<simplerecoveryjob>()

.withidentity("job_" + count, schedid) // put triggers in group named after the cluster node instance just to distinguish (in logging) what was scheduled from where

.requestrecovery() // ask scheduler to re-execute this job if it was in progress when the scheduler went down...

.build();

trigger = (isimpletrigger)triggerbuilder.create()

.withidentity("triger_" + count, schedid)

.startat(datebuilder.futuredate(2, intervalunit.second))

.withsimpleschedule(x => x.withrepeatcount(1000).withinterval(timespan.fromseconds(5)))

.build();

console.writeline(string.format("{0} will run at: {1} and repeat: {2} times, every {3} seconds", job.key, trigger.getnextfiretimeutc(), trigger.repeatcount, trigger.repeatinterval.totalseconds));

sched.schedulejob(job, trigger);

// jobs don't start firing until start() has been called...

console.writeline("------- starting scheduler ---------------");

sched.start();

console.writeline("------- started scheduler ----------------");

console.writeline("------- waiting for one hour... ----------");

thread.sleep(timespan.fromhours(1));

console.writeline("------- shutting down --------------------");

sched.shutdown();

console.writeline("------- shutdown complete ----------------");

}

测试添加两个job,每隔5s执行一次。

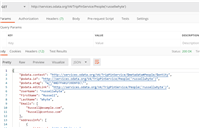

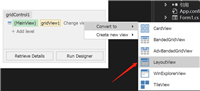

在图中可以看到:job1和job2不会重复执行,当我停了job2时,job2也在job1当中运行。

这样就可以实现分布式部署时的问题了,quzrtz.net的数据库结构随便网上找一下,运行一些就好了。

截取几个数据库的数据图:基本上就存储了一些这样的信息

jobdetail

触发器的数据

这个是调度器的

这个是锁的

1.job的介绍:有状态job,无状态job。

2.misfire

3.trigger,cron介绍

4.第一部分的改造,自己实现一个基于在hostedservice能够进行分布式调度的job类,其实只要实现了这个,其他的上面讲的都没有问题。弃用quartz的表的行级锁。因为这并发高了比较慢!!

个人还是没有测试出来这个requestrecovery。怎么用过的!!

如对本文有疑问,请在下面进行留言讨论,广大热心网友会与你互动!! 点击进行留言回复

Blazor server side 自家的一些开源的, 实用型项目的进度之 CEF客户端

.NET IoC模式依赖反转(DIP)、控制反转(Ioc)、依赖注入(DI)

vue+.netcore可支持业务代码扩展的开发框架 VOL.Vue 2.0版本发布

网友评论