
org.apache.spark.SparkException: Job aborted due to stage failure: Task 1 in stage 29.1 failed 4 times, most recent failure: Lost task 1.3 in stage 29.1 (TID 466, magnesium, executor 4): java.lang.RuntimeException: java.lang.reflect.InvocationTargetException

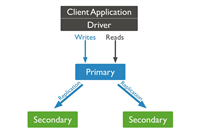

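I ran into this error while querying Cassandra from Spark Streaming. In each batch, first check that both the `Cluster` and the `Session` are closed; leaving either one open triggers this error. If the error persists, check the Netty version. I recommend installing the Maven Helper plugin in IDEA to find the conflict, then removing the conflicting lower-version Netty dependencies.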
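To check the Netty version at runtime, one option is to print which jar a Netty class was actually loaded from. The following is a minimal sketch under assumptions: `ClasspathProbe` and `jarOf` are hypothetical names, not part of Spark or the Cassandra driver, and `io.netty.channel.EventLoopGroup` is only used as a representative Netty class.

```scala
import scala.util.Try

object ClasspathProbe {
  // Hypothetical helper: returns the location (usually a jar path) the given
  // class was loaded from, or None if the class cannot be found or the JVM
  // exposes no code source for it (for example, bootstrap classes).
  def jarOf(className: String): Option[String] =
    Try(Class.forName(className)).toOption
      .flatMap(c => Option(c.getProtectionDomain))
      .flatMap(pd => Option(pd.getCodeSource))
      .flatMap(cs => Option(cs.getLocation))
      .map(_.toString)
}

// Example call, e.g. inside foreachPartition so that it runs on an executor:
// println(ClasspathProbe.jarOf("io.netty.channel.EventLoopGroup"))
```

If the printed path points at an older Netty jar than the one the driver expects, that dependency is a likely candidate for exclusion.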

    at shade.com.datastax.spark.connector.google.common.base.Throwables.propagate(Throwables.java:160)
    at com.datastax.driver.core.NettyUtil.newEventLoopGroupInstance(NettyUtil.java:136)
    at com.datastax.driver.core.NettyOptions.eventLoopGroup(NettyOptions.java:99)
    at com.datastax.driver.core.Connection$Factory.
    at com.datastax.driver.core.Cluster$Manager.init(Cluster.java:1410)
    at com.datastax.driver.core.Cluster.init(Cluster.java:159)
    at com.datastax.driver.core.Cluster.connectAsync(Cluster.java:330)
    at com.datastax.driver.core.Cluster.connect(Cluster.java:280)
    at streamingintegrationkafkabac$$anonfun$main$1$$anonfun$apply$1.apply(streamingintegrationkafkabac.scala:155)
    at streamingintegrationkafkabac$$anonfun$main$1$$anonfun$apply$1.apply(streamingintegrationkafkabac.scala:144)
    at org.apache.spark.rdd.RDD$$anonfun$foreachPartition$1$$anonfun$apply$29.apply(RDD.scala:935)
    at org.apache.spark.rdd.RDD$$anonfun$foreachPartition$1$$anonfun$apply$29.apply(RDD.scala:935)
    at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2074)
    at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2074)
    at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:87)
    at org.apache.spark.scheduler.Task.run(Task.scala:109)
    at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:345)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
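    at java.lang.Thread.run(Thread.java:745)

The Cassandra access inside `foreachPartition`:

    import com.datastax.driver.core.{Cluster, HostDistance, PoolingOptions}

    dataframe.foreachPartition { part =>
      // Connection pool limits for hosts in the local data center
      val poolingOptions = new PoolingOptions
      poolingOptions
        .setCoreConnectionsPerHost(HostDistance.LOCAL, 4)
        .setMaxConnectionsPerHost(HostDistance.LOCAL, 10)

      val cluster = Cluster
        .builder
        .addContactPoints("localhost")
        .withCredentials("cassandra", "cassandra")
        .withPoolingOptions(poolingOptions)
        .build

      try {
        val session = cluster.connect("keyspace")
        try {
          part.foreach { item =>
            // business logic
          }
        } finally {
          // Close the session before the cluster that owns it
          session.close()
        }
      } finally {
        // Runs even if connect() or the business logic throws
        cluster.close()
      }
    }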
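The advice to close both objects in every batch can also be factored into a small loan-pattern helper, so the close order does not depend on each call site. This is only a sketch: `Resources` and `withNested` are hypothetical names, and the commented usage assumes the 3.x Java driver from the stack trace, where `Cluster` and `Session` are `Closeable`.

```scala
object Resources {
  // Hypothetical helper: opens an outer resource, derives an inner one from
  // it, runs `body`, then closes the inner resource before the outer one,
  // even if `derive` or `body` throws.
  def withNested[A <: AutoCloseable, B <: AutoCloseable, R](open: => A)(derive: A => B)(body: B => R): R = {
    val outer = open
    try {
      val inner = derive(outer)
      try body(inner)
      finally inner.close()
    } finally outer.close()
  }
}

// Possible usage inside foreachPartition (assumes driver 3.x on the classpath):
// Resources.withNested(Cluster.builder.addContactPoints("localhost").build)(_.connect("keyspace")) { session =>
//   part.foreach { item => /* business logic */ }
// }
```

The helper only depends on `AutoCloseable`, so it can be exercised with stub resources without a running Cassandra node.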
