A beginner-level crawler: it scrapes only each book's title, description and download links, and stores them in a database.

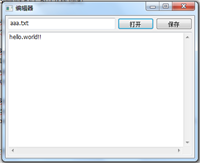

Database utility class: dbutil.py

```python
import pymysql


class dbutils(object):

    def conndb(self):  # connect to the database
        # utf8mb4 matches the utf8mb4 collation of the tables defined below
        conn = pymysql.connect(host='192.168.251.114', port=3306, user='root',
                               password='b6f3g2', database='yangsj', charset='utf8mb4')
        cur = conn.cursor()
        return conn, cur

    def exeupdate(self, conn, cur, sql, params=None):  # update or insert
        # optional params are bound by pymysql, which escapes the values
        sta = cur.execute(sql, params)
        conn.commit()
        return sta

    def exedelete(self, conn, cur, ids):  # delete (not used in this demo)
        sta = 0
        for eachid in ids.split(' '):
            sta += cur.execute("delete from students where id=%s", (int(eachid),))
        conn.commit()
        return sta

    def exequery(self, cur, sql):  # query
        effect_row = cur.execute(sql)
        return effect_row, cur

    def connclose(self, conn, cur):  # close the connection and release resources
        cur.close()
        conn.close()


if __name__ == '__main__':
    dbutil = dbutils()
    conn, cur = dbutil.conndb()
    dbutil.connclose(conn, cur)
```
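Building SQL with `'%s'` string formatting breaks as soon as a value contains an apostrophe. This is why the page-parsing code strips `'` from titles and descriptions. Passing the values separately avoids that: pymysql's `cursor.execute(sql, args)` takes a `%s`-placeholder query plus an args tuple.

The sketch below illustrates the difference using the standard-library `sqlite3` module as a stand-in, so it runs without a MySQL server. sqlite3 uses `?` placeholders instead of `%s`, and `demo` is a hypothetical helper.

```python
import sqlite3


def naive_insert(cur, title):
    # Builds the SQL text by string formatting, like the '%s' % value style.
    cur.execute("insert into book (bookname) values ('%s')" % title)


def param_insert(cur, title):
    # Passes the value separately; the driver binds it, so quotes need no escaping.
    # sqlite3 uses ? placeholders; pymysql uses %s placeholders instead.
    cur.execute("insert into book (bookname) values (?)", (title,))


def demo(title):
    """Try both insert styles; return (naive insert succeeded?, stored titles)."""
    conn = sqlite3.connect(":memory:")
    cur = conn.cursor()
    cur.execute("create table book (id integer primary key, bookname text)")
    try:
        naive_insert(cur, title)
        naive_ok = True
    except sqlite3.OperationalError:
        naive_ok = False  # the apostrophe ended the string literal early
    param_insert(cur, title)
    stored = [row[0] for row in cur.execute("select bookname from book")]
    conn.close()
    return naive_ok, stored


if __name__ == "__main__":
    print(demo("Python's Cookbook"))  # (False, ["Python's Cookbook"])
```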

Book persistence file: bookope.py

```python
import logging

from dbutil import dbutils
from bookinfo import book
from bookinfo import downloadinfo

logging.basicConfig(level=logging.INFO)


class bookoperator(object):

    def __addbook(self, book):
        logging.info("add book:%s" % book.bookname)
        dbutil = dbutils()
        conn, cur = dbutil.conndb()
        # parameterized query: pymysql escapes the values, so quotes in a title cannot break the SQL
        insertbooksql = "insert into book (bookname,bookurl,bookinfo) values (%s,%s,%s)"
        cur.execute(insertbooksql, (book.bookname, book.downloadurl, book.maininfo))
        conn.commit()
        dbutil.connclose(conn, cur)

    def __selectlastbookid(self):
        # id of the most recently inserted book; assumes no other process inserts at the same time
        logging.info("selectlastbookid")
        dbutil = dbutils()
        conn, cur = dbutil.conndb()
        selectlastbooksql = "select id from book order by id desc limit 1"
        effect_row, cur = dbutil.exequery(cur, selectlastbooksql)
        bookid = cur.fetchone()[0]
        dbutil.connclose(conn, cur)
        return bookid

    def __addbookdownloadinfos(self, downloadinfos, bookid):
        logging.info("add bookid:%s" % bookid)
        dbutil = dbutils()
        conn, cur = dbutil.conndb()
        insertbookdownloadinfo = "insert into book_down_url (bookid,downname,downurl) values (%s,%s,%s)"
        for info in downloadinfos:
            cur.execute(insertbookdownloadinfo, (bookid, info.downname, info.downurl))
        conn.commit()  # one commit for all links of this book
        dbutil.connclose(conn, cur)

    def addbookinfo(self, book):
        logging.info("add bookinfo:%s" % book.bookname)
        self.__addbook(book)
        bookid = self.__selectlastbookid()
        self.__addbookdownloadinfos(book.downloadinfos, bookid)


if __name__ == '__main__':
    bookope = bookoperator()
    # test data, in argument order: maininfo, downloadurl, bookname
    b = book("aaa", "yang", "cccc")
    b.adddownloadurl(downloadinfo("aaa.html", "book"))
    bookope.addbookinfo(b)
```
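`__selectlastbookid` reads the highest `id` after inserting a book. That only gives the right answer when nothing else writes to the `book` table at the same time.

The sketch below shows an alternative: take the id from the inserting cursor's `lastrowid` attribute, which pymysql cursors also provide. Here sqlite3 stands in for MySQL. `add_book_with_links` and `make_db` are hypothetical helpers, not part of the original project.

```python
import sqlite3


def make_db():
    """In-memory stand-in for the two MySQL tables (types simplified for SQLite)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        "create table book (id integer primary key autoincrement, "
        "bookname text, bookurl text, bookinfo text);"
        "create table book_down_url (id integer primary key autoincrement, "
        "bookid integer, downname text, downurl text);"
    )
    return conn


def add_book_with_links(conn, bookname, bookurl, bookinfo, links):
    """Insert a book and its (downurl, downname) links; return the new book id."""
    cur = conn.cursor()
    cur.execute(
        "insert into book (bookname, bookurl, bookinfo) values (?, ?, ?)",
        (bookname, bookurl, bookinfo),
    )
    # lastrowid is the id generated by this cursor's own insert, so rows written
    # by another client cannot be mistaken for this book.
    bookid = cur.lastrowid
    cur.executemany(
        "insert into book_down_url (bookid, downname, downurl) values (?, ?, ?)",
        [(bookid, downname, downurl) for downurl, downname in links],
    )
    conn.commit()
    return bookid


if __name__ == "__main__":
    conn = make_db()
    first = add_book_with_links(conn, "aaa", "a.html", "info", [("d1.html", "mirror 1")])
    second = add_book_with_links(conn, "bbb", "b.html", "info",
                                 [("d2.html", "mirror 1"), ("d3.html", "mirror 2")])
    print(first, second)  # 1 2
```

Doing both inserts on one connection and committing once also removes the extra connections that the original opens per step.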

Book information file: bookinfo.py

```python
class book(object):
    # book information

    def __init__(self, maininfo, downloadurl, bookname):
        self.maininfo = maininfo        # description text
        self.downloadurl = downloadurl  # URL of the page the book was scraped from
        self.bookname = bookname
        self.downloadinfos = []         # list of downloadinfo objects

    def adddownloadurl(self, downloadinfo):
        self.downloadinfos.append(downloadinfo)

    def print_book_info(self):
        print("bookname :%s" % self.bookname)


class downloadinfo(object):
    # download information

    def __init__(self, downurl, downname):
        self.downurl = downurl
        self.downname = downname

    def print_down_info(self):
        print("download %s - %s" % (self.downurl, self.downname))
```
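The two classes are plain data holders. For comparison, here is a sketch of the same model written with the standard-library `dataclasses` module, which generates `__init__`, `__repr__` and `__eq__` automatically.

The CamelCase names `Book` and `DownloadInfo` are hypothetical. They are not drop-in replacements for the lowercase `book` and `downloadinfo` that the other files import.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DownloadInfo:
    downurl: str   # download link
    downname: str  # link text shown on the page


@dataclass
class Book:
    maininfo: str     # description text
    downloadurl: str  # page the book was scraped from
    bookname: str
    # default_factory gives every Book its own list instead of one shared default
    downloadinfos: List[DownloadInfo] = field(default_factory=list)

    def adddownloadurl(self, info: DownloadInfo) -> None:
        self.downloadinfos.append(info)


if __name__ == "__main__":
    b = Book("aaa", "yang", "cccc")
    b.adddownloadurl(DownloadInfo("aaa.html", "book"))
    print(b)
```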

51job page-parsing file: fiveonejobfetch.py

```python
import logging
from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup

from bookinfo import book
from bookinfo import downloadinfo


class pagefetch(object):

    host = "https://www.jb51.net/"  # site root (requests needs the scheme)
    category = "books/"             # category path

    def __init__(self, pageurl):
        self.pageurl = pageurl  # listing page, e.g. list152_1.html
        self.url = pagefetch.host + pagefetch.category + pageurl  # full URL

    def __getpagecontent(self):
        return pagefetch.getpagecontent(self.url)

    @staticmethod
    def getpagecontent(url):
        req = requests.get(url, timeout=10)
        if req.status_code == 200:
            req.encoding = "gb2312"  # decode the page as GB2312
            return req.text
        return ""

    def __getmaxpagenumandurl(self):
        # Find the listing's page count. Pagination URLs look like list45_2.html, where 2 is the page number.
        fetchurl = self.pageurl
        maxpagenum = 0
        maxlink = ""
        while maxlink == "":
            url = pagefetch.host + pagefetch.category + fetchurl
            soup = BeautifulSoup(pagefetch.getpagecontent(url), "html.parser")
            plists = soup.select(".plist")
            if not plists:  # no pagination bar (or the request failed): stop instead of looping forever
                break
            for ul in plists:
                logging.debug(ul)
                maxpagenum = ul.select("strong")[0].text  # current page number
                alink = ul.select("a")
                # keep following the last pagination link; once it is "#", we are on the last page
                if alink[-1]['href'] == "#":
                    maxlink = alink[1]['href']
                else:
                    fetchurl = alink[-1]['href']
        return maxpagenum, maxlink

    def __formatpage(self, pagenum):
        # build the URL of page pagenum+1, e.g. list45_2.html
        linebeginsite = self.pageurl.index("_") + 1
        docbeginsite = self.pageurl.index(".")
        return self.pageurl[:linebeginsite] + str(pagenum + 1) + self.pageurl[docbeginsite:]

    def getbookpagelist(self):
        # URLs of every page of the listing
        shortpagelist = []
        maxpagenum, urlpattern = self.__getmaxpagenumandurl()
        for i in range(int(maxpagenum)):
            shortpagelist.append(self.host + self.category + self.__formatpage(i))
        return shortpagelist

    @staticmethod
    def getdownloadpage(url):
        # links to each book's detail page on one listing page
        downpage = []
        soup = BeautifulSoup(pagefetch.getpagecontent(url), "html.parser")
        for a in soup.select(".cur-cat-list .btn-dl"):
            downpage.append(urljoin(pagefetch.host, a['href']))
        return downpage

    @staticmethod
    def getbookinfo(url):
        logging.info("fetching book info, url: %s" % url)
        soup = BeautifulSoup(pagefetch.getpagecontent(url), "html.parser")
        # "截图:" ("Screenshot:") is literal page text and is removed from the description
        maininfo = soup.select("#soft-intro")[0].text.replace("截图:", "").replace("'", "")
        title = soup.select("dl dt h1")[0].text.replace("'", "")
        b = book(maininfo, url, title)
        for ul in soup.select(".ul_address"):
            for li in ul.select("li"):
                a = li.select("a")[0]
                b.adddownloadurl(downloadinfo(a['href'], a.text))
        return b


if __name__ == '__main__':
    p = pagefetch("list152_1.html")
    shortpagelist = p.getbookpagelist()
    downpage = []
    for page in shortpagelist:
        downpage += pagefetch.getdownloadpage(page)
    print("================ Summary ================")
    for bookdownloadpage in downpage:
        b = pagefetch.getbookinfo(bookdownloadpage)
        print(b.bookname + ":%s" % b.downloadurl)
        for d in b.downloadinfos:
            print("%s - %s" % (d.downurl, d.downname))
```
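`__formatpage` rebuilds a listing URL by slicing around `_` and `.`. Below is the same idea as a small regex-based sketch. It assumes every listing page is named `list<id>_<page>.html`, as in the examples above; `page_urls` is a hypothetical helper, and the `https://` base URL is an assumption.

It also uses `urljoin`, which copes with both relative and root-relative hrefs.

```python
import re
from urllib.parse import urljoin

# Assumed listing-page pattern: list<category id>_<page number>.html
PAGE_RE = re.compile(r"^(list\d+_)(\d+)(\.html)$")


def page_urls(base, pageurl, maxpagenum):
    """Return the URLs of pages 1..maxpagenum of the listing that pageurl belongs to."""
    m = PAGE_RE.match(pageurl)
    if m is None:
        raise ValueError("unexpected listing page name: %r" % pageurl)
    prefix, _, suffix = m.groups()  # drop the page number, keep the rest
    return [urljoin(base, "%s%d%s" % (prefix, n, suffix)) for n in range(1, maxpagenum + 1)]


if __name__ == "__main__":
    for url in page_urls("https://www.jb51.net/books/", "list152_35.html", 3):
        print(url)
    # a root-relative href is resolved against the site root, with no double slash
    print(urljoin("https://www.jb51.net/", "/books/12345.html"))
```

Starting from a mid-listing page such as `list152_35.html` still yields pages 1 through the maximum, which matches what `getbookpagelist` does for the URLs in 51job.py.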

Entry script: 51job.py. Copy all of the files above into the same folder and run this one.

```python
from fiveonejobfetch import pagefetch
from bookope import bookoperator


def main(url):
    p = pagefetch(url)
    shortpagelist = p.getbookpagelist()
    # use a local name other than "bookoperator": assigning to the class name inside
    # the function would make it a local variable and raise UnboundLocalError
    operator = bookoperator()
    downpage = []
    for page in shortpagelist:
        downpage += pagefetch.getdownloadpage(page)
    for bookdownloadpage in downpage:
        b = pagefetch.getbookinfo(bookdownloadpage)
        operator.addbookinfo(b)
    print("Scraped successfully: " + url)


if __name__ == '__main__':
    urls = ["list152_35.html", "list300_2.html", "list476_6.html", "list977_2.html",
            "list572_5.html", "list509_2.html", "list481_1.html", "list576_1.html",
            "list482_1.html", "list483_1.html", "list484_1.html"]
    for url in urls:
        main(url)
```
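`main` stops at the first detail page whose layout does not match the selectors. For example, `soup.select("#soft-intro")[0]` raises `IndexError` when that element is missing.

Below is a sketch of a driver loop that skips links it has already visited and records failures instead of aborting the run. `crawl` is hypothetical, and its two callables are hypothetical hooks standing in for `pagefetch.getdownloadpage` and for `getbookinfo` plus `addbookinfo`.

```python
import logging
from typing import Callable, Iterable, List


def crawl(listing_pages: Iterable[str],
          get_detail_links: Callable[[str], List[str]],
          fetch_and_store: Callable[[str], None]) -> List[str]:
    """Visit every detail link once and return the links that failed."""
    seen = set()
    failed = []
    for page in listing_pages:
        for link in get_detail_links(page):
            if link in seen:  # a link may appear more than once; process it only once
                continue
            seen.add(link)
            try:
                fetch_and_store(link)
            except Exception:  # one malformed page should not abort the whole run
                logging.exception("failed: %s", link)
                failed.append(link)
    return failed
```

The returned list can be retried later or logged, so one bad page no longer discards the rest of the run.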

Database tables: the book information table and the download-address table

```sql
create table `book` (
  `id` int(11) not null auto_increment,
  `bookname` varchar(200) null default null,
  `bookurl` varchar(500) null default null,
  `bookinfo` text null,
  primary key (`id`)
) collate='utf8mb4_general_ci' engine=innodb auto_increment=2936;

create table `book_down_url` (
  `id` int(11) not null auto_increment,
  `bookid` int(11) not null default '0',
  `downname` varchar(200) not null default '0',
  `downurl` varchar(2000) not null default '0',
  primary key (`id`)
) collate='utf8mb4_general_ci' engine=innodb auto_increment=44441;
```
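The two tables are linked only by `book_down_url.bookid`, which holds `book.id`; no foreign key is declared. Below is a sketch of reading a book's download links back with a join. It runs against an in-memory SQLite copy of the schema with simplified types, which is an assumption for the demo, since the real tables live in MySQL. `links_for` is a hypothetical helper.

```python
import sqlite3

# Same columns as the MySQL tables above, simplified to SQLite types.
SCHEMA = """
create table book (id integer primary key autoincrement, bookname text,
                   bookurl text, bookinfo text);
create table book_down_url (id integer primary key autoincrement,
                            bookid integer not null default 0,
                            downname text not null default '0',
                            downurl text not null default '0');
"""


def links_for(conn, bookname):
    """Return (downname, downurl) pairs for a book, joining on book_down_url.bookid = book.id."""
    return conn.execute(
        "select d.downname, d.downurl from book b "
        "join book_down_url d on d.bookid = b.id "
        "where b.bookname = ? order by d.id",
        (bookname,),
    ).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.execute("insert into book (bookname, bookurl, bookinfo) values ('cccc', 'yang', 'aaa')")
    conn.execute("insert into book_down_url (bookid, downname, downurl) values (1, 'book', 'aaa.html')")
    print(links_for(conn, "cccc"))  # [('book', 'aaa.html')]
```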

Git repository: https://git.oschina.net/yangsj/bookfetch/tree/master
